We’ve written about using AWS Lambda in Boxer and are pretty happy with it. That said, we’ve been refining our Lambda scaling strategies as we’ve grown.

If you read user articles about AWS Lambda, that’s a common refrain. Lambda is good for getting going and amazing for some workloads, but it is not suited to everything. As we grow, we learn more about the latter.

What is AWS Lambda

Introduced in 2014, AWS Lambda is an “event-driven, serverless Function as a Service” addition to AWS. It started as a fairly minimal ‘run some code based on a trigger’ service and has grown into something much more complex since.

We’ve been big fans since our company’s founding, but as we get bigger and Boxer grows more complex, we’ve refined our Lambda scaling strategies across various AWS services.

How We Use Lambda for Data Import

We’re a weather company that imports a lot of real-time weather data. This consists of radar (observed data) and models (future forecast data).

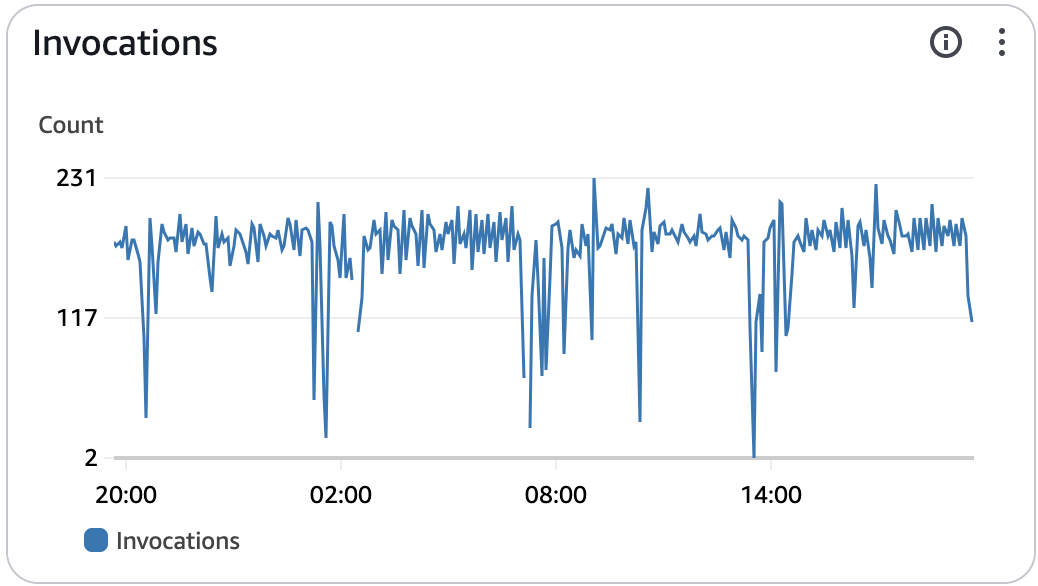

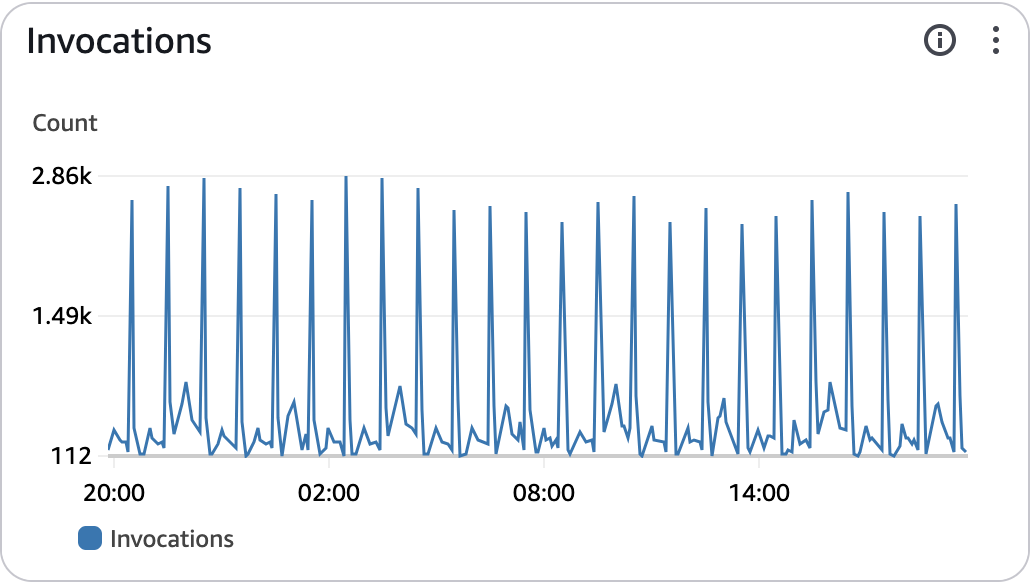

The radar data drops every few minutes, though if you look at the pattern, it acts like it’s more or less continuous with some breaks. Here’s an example of one of our radar feeds and the Lambda invocations over time.

It’s an interesting workload because you could move this to a few EC2 instances and get almost the same result, but you’d likely be trading away a bit of latency. For radar, we care a lot about latency, so this one stays on Lambda.

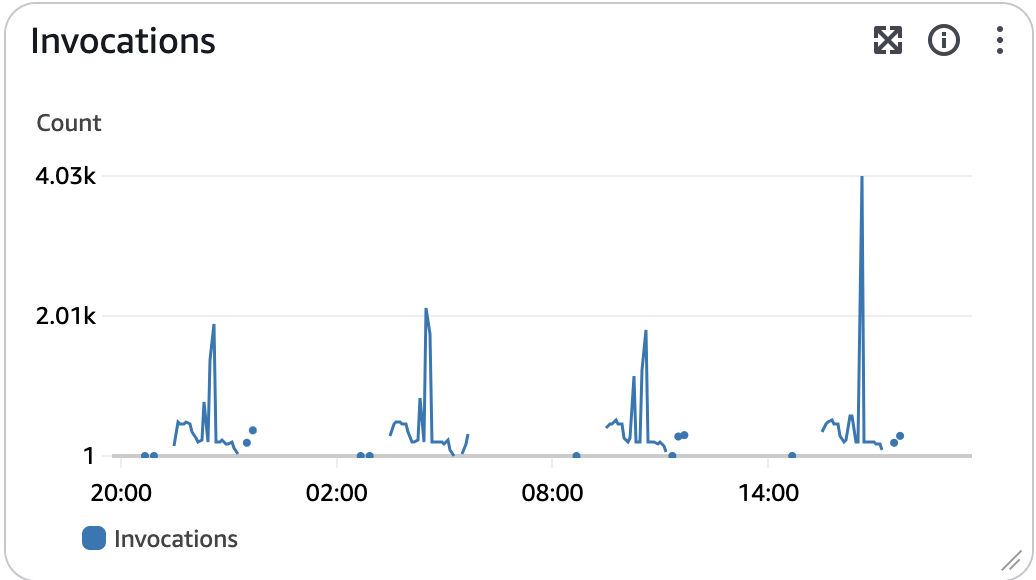

For models, developing proper Lambda scaling strategies gets more interesting. Here’s the pattern for our GFS importer. GFS is a big global model that runs four times a day.

We process those data drops in parallel… but do we really need to? We could afford a bit of latency here. On the other hand, it does not cost us very much for the importer. So these probably stay on Lambda, too.
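To make that tradeoff concrete, here is a rough back-of-the-envelope sketch of how long a model drop takes to finish with full fan-out versus a small fixed pool of workers. The file counts, per-file times, and worker counts are made-up placeholders, not our real numbers, and the model assumes every file takes about the same time to process.

```python
import math

def drain_time_seconds(file_count, seconds_per_file, workers):
    """Approximate wall-clock time to process one data drop.

    Assumes every file takes roughly the same time and that workers
    pick up the next file as soon as they finish the previous one.
    """
    if workers < 1:
        raise ValueError("need at least one worker")
    rounds = math.ceil(file_count / workers)
    return rounds * seconds_per_file

# Hypothetical drop: 200 files at 30 seconds each.
full_fan_out = drain_time_seconds(200, 30, workers=200)  # one Lambda per file
small_pool = drain_time_seconds(200, 30, workers=8)      # a few fixed workers

print(f"fan-out: {full_fan_out}s, small pool: {small_pool}s")
```

The gap between the two numbers is the latency you would be accepting in exchange for fewer concurrent workers.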

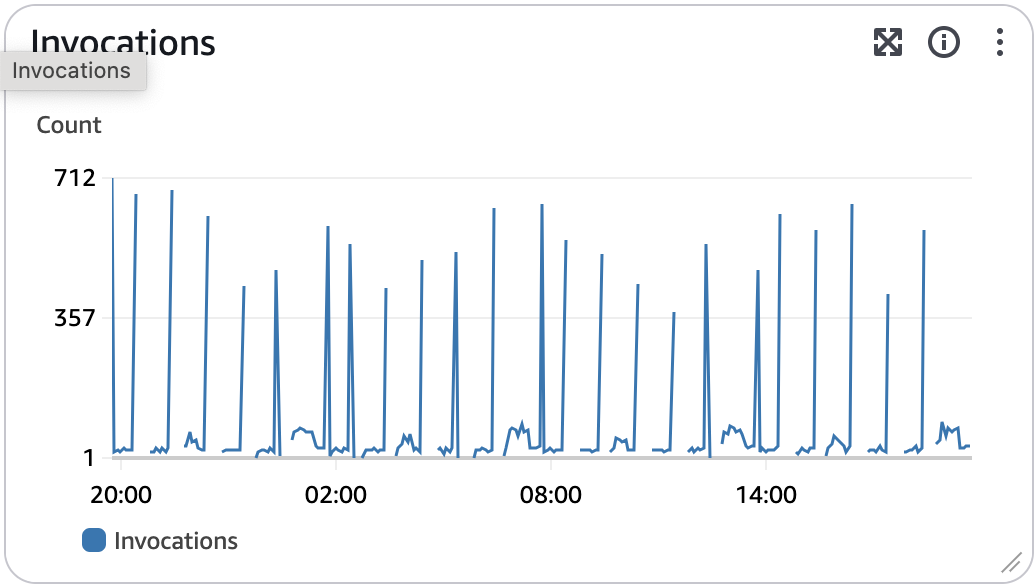

HRRR is a model over the US that drops every hour but with less data. We care more about latency here since the forecast is more timely.

That’s just for import. Once our importers run, a given data set is available for query by our users but not yet for visual display by Terrier or legacy endpoints like WMTS. That requires more work.

Lambda Scaling Strategies for Data Processing

Once data is imported into Boxer as Zarr, we kick off the rest of the processing necessary for display. This is lightweight for Terrier, as we’re not reprojecting anything. We chop up a given data set and store it in our own cloud-friendly format, update our global metadata, and we’re done.

We consolidated this work in the last update, so rather than do it on a per-importer basis (e.g., GFS or MRMS by itself), we add it to a big bucket of processing for all our data sources. This Lambda watches our S3 buckets for imported data and kicks off when it sees something new. Its invocations trend like so.
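For readers who haven’t wired a Lambda to S3 before, a minimal sketch of that kind of handler might look like the following. The event shape is the standard S3 notification format, but the key prefixes, the `classify` helper, and the job dictionaries are hypothetical stand-ins, not our actual layout or code.

```python
from urllib.parse import unquote_plus

# Hypothetical key prefixes; the real bucket layout is not shown in this post.
SOURCE_PREFIXES = {"gfs/": "GFS", "hrrr/": "HRRR", "mrms/": "MRMS"}

def extract_new_objects(event):
    """Return (bucket, key) pairs for each object-created record in an S3 event."""
    objects = []
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        objects.append((bucket, key))
    return objects

def classify(key):
    """Map an object key to a data source name, or None if unrecognized."""
    for prefix, source in SOURCE_PREFIXES.items():
        if key.startswith(prefix):
            return source
    return None

def handler(event, context):
    """Lambda entry point: build one processing job per recognized new object."""
    jobs = []
    for bucket, key in extract_new_objects(event):
        source = classify(key)
        if source is not None:
            jobs.append({"source": source, "bucket": bucket, "key": key})
    # A real handler would start the display processing here.
    return jobs
```

Routing everything through one handler like this is what lets a single function serve every data source instead of one per importer.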

You can see the HRRR kicking off hourly, so this is probably still a good use of Lambda. Maybe. It really depends on the latency we’d be introducing by moving this processing to instances. We’ve done that now for legacy WMTS support.

Advanced Lambda Scaling Strategies: Moving to Instances

Processing our visuals for Terrier is very lightweight. It was designed that way. However, producing image tiles for legacy systems that need WMS or WMTS is much more work.

We have to reproject our imported data into the coordinate systems our customers need. That tends to be costly and uses a lot of memory. This is why Terrier is so much better, but you gotta do what you gotta do.

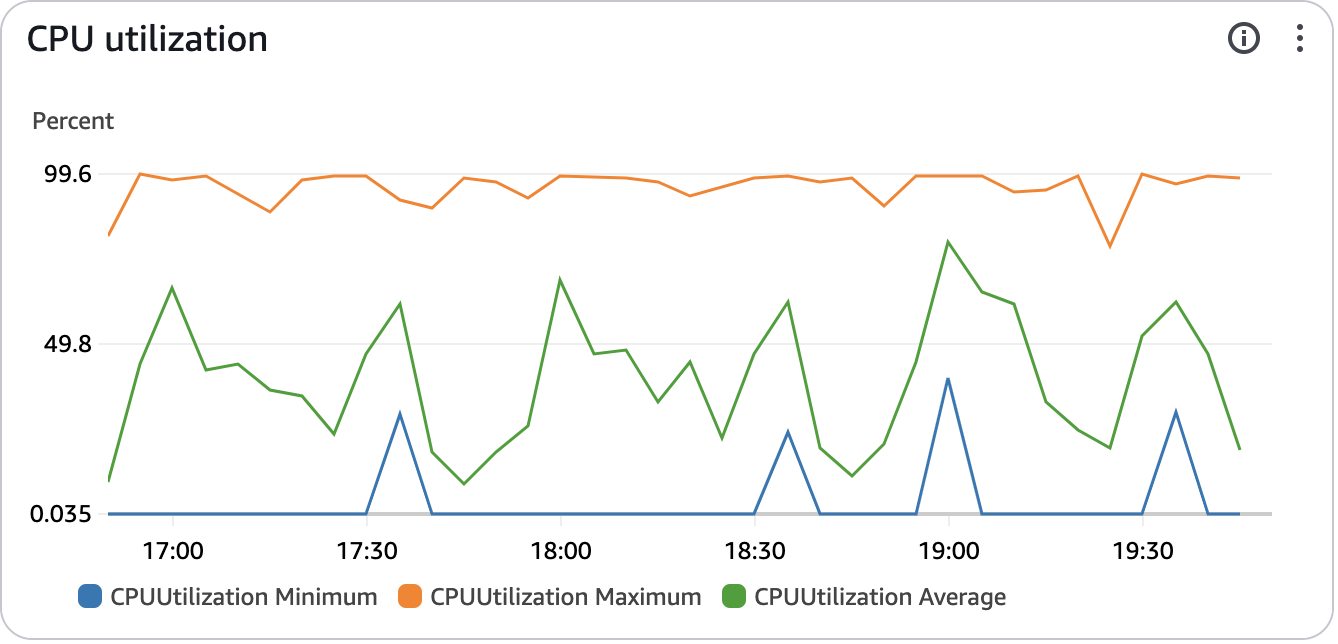

We found reprojection was extremely expensive on Lambda, so our Lambda scaling strategies evolved to move this workload to a cluster of EC2 instances. They’re kept reasonably busy.

Traffic is still bursty, so we’ll continue tuning that.

I think we’ll also be moving some of our Terrier processing, particularly the GFS, over to a cluster. It can afford a bit of latency in exchange for the lower cost.
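If you want to run the same comparison for your own workload, the arithmetic is simple. Lambda bills per request and per GB-second of execution, while instances bill per hour whether they are busy or not. The sketch below takes every price as a parameter. The numbers in the example are placeholders rather than current AWS pricing or our actual bill, so plug in the rates for your region.

```python
def lambda_monthly_cost(invocations, avg_seconds, memory_gb,
                        price_per_gb_second, price_per_million_requests):
    """Estimate monthly Lambda cost from duration, memory, and request count."""
    compute = invocations * avg_seconds * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests

def instance_monthly_cost(instance_count, price_per_hour, hours_per_month=730):
    """Estimate monthly cost of always-on instances."""
    return instance_count * price_per_hour * hours_per_month

# Placeholder workload and prices, for illustration only.
on_lambda = lambda_monthly_cost(
    invocations=1_000_000, avg_seconds=10, memory_gb=4,
    price_per_gb_second=0.00001, price_per_million_requests=0.2,
)
on_instances = instance_monthly_cost(instance_count=2, price_per_hour=0.1)

print(f"Lambda: ${on_lambda:.2f}/month, instances: ${on_instances:.2f}/month")
```

Long-running, memory-hungry jobs push the GB-second term up quickly, which is the pattern reprojection falls into.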

Developing Flexible Lambda Scaling Strategies

Having used AWS Lambda for a few years, I don’t regret initially putting most of our work on it. Configuring containers for Lambda is easier than it is for Fargate or regular EC2 instances. If you’re sloppy about memory, it’s even better.

Our Lambda scaling strategies have matured as we’ve grown and understood our workloads better. Some workloads are better suited to fixed instances. They’re certainly cheaper that way. But we’ll keep Lambda for the importers and the most latency-dependent workloads.